Tensorflow Serving with Docker. How to deploy ML models to production. | by Vijay Gupta | Towards Data Science

Is there a way to verify Tensorflow Serving is using GPUs on a GPU instance? · Issue #345 · tensorflow/serving · GitHub

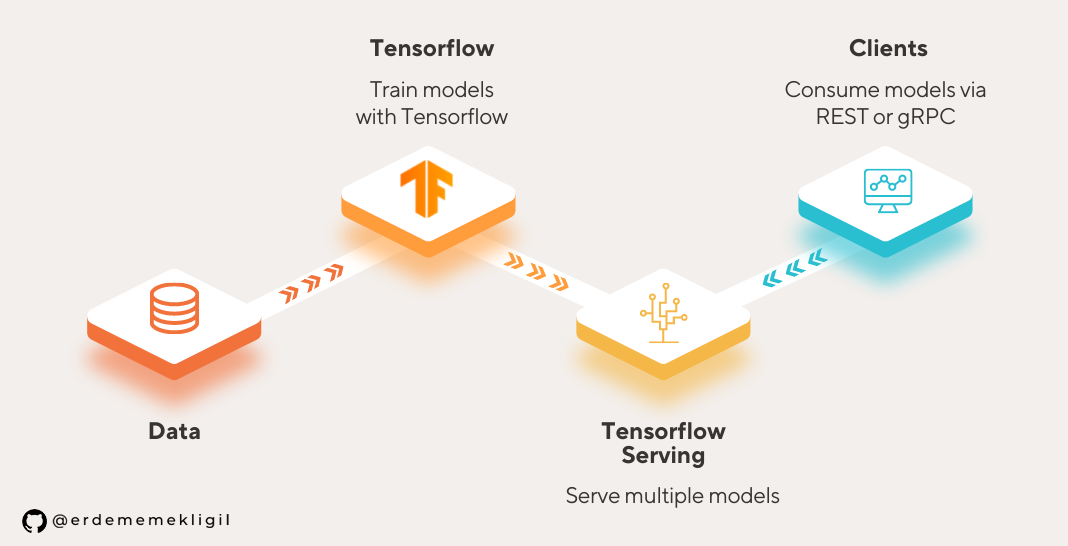

Serving an Image Classification Model with Tensorflow Serving | by Erdem Emekligil | Level Up Coding

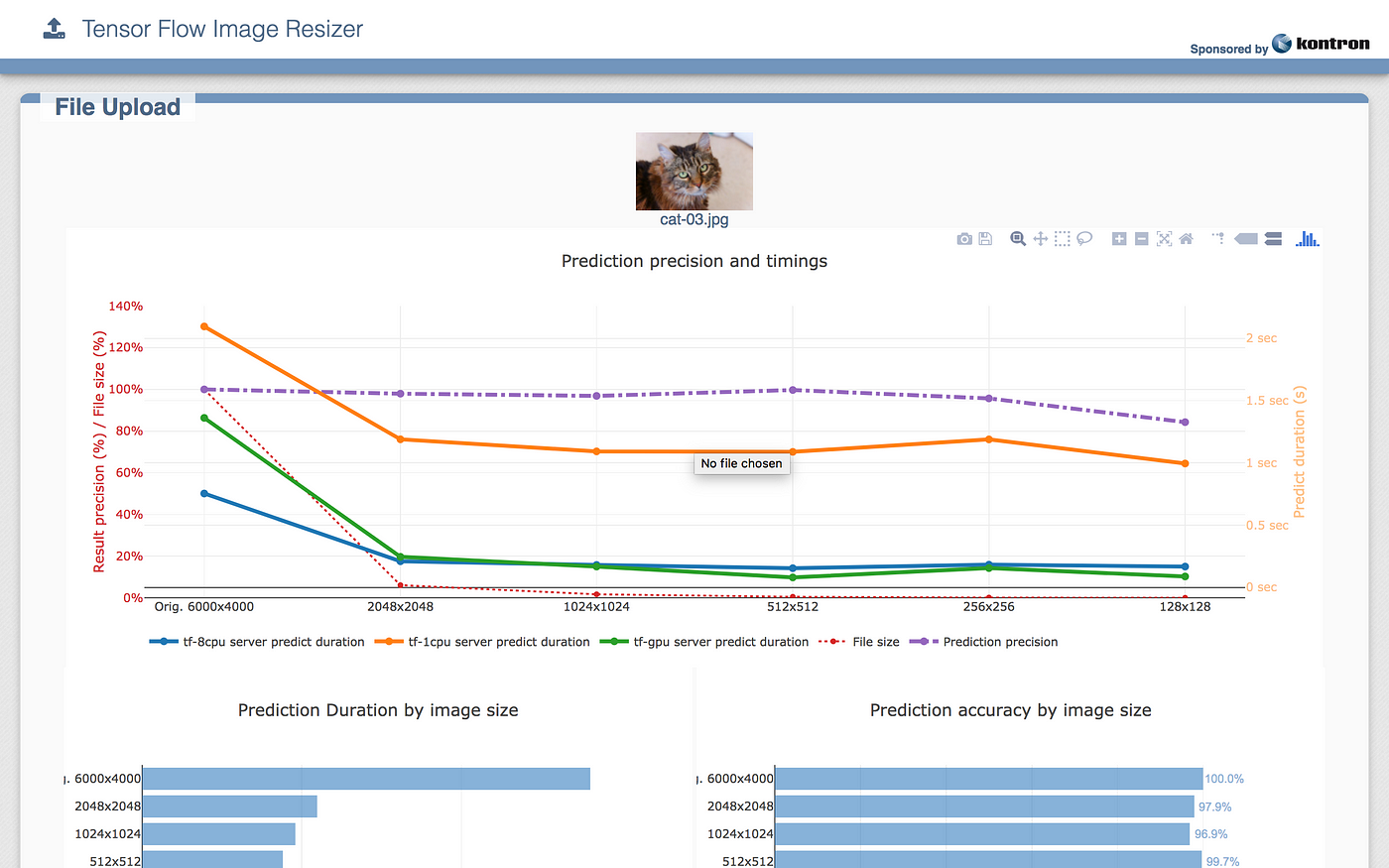

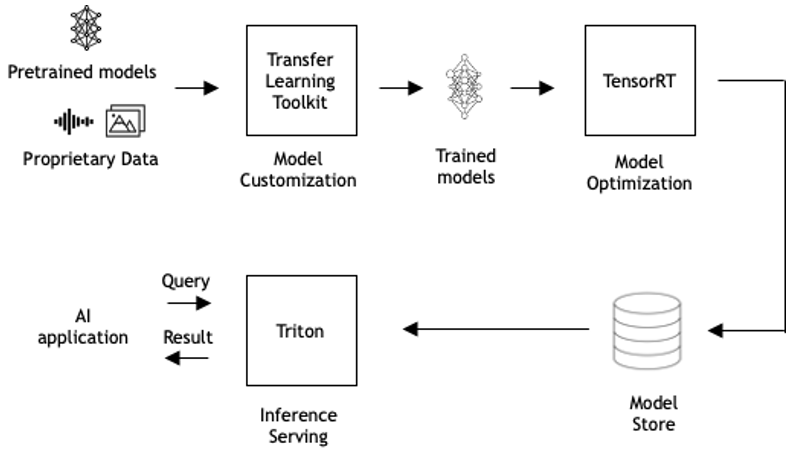

Running TensorFlow inference workloads with TensorRT5 and NVIDIA T4 GPU | Compute Engine Documentation | Google Cloud

GitHub - EsmeYi/tensorflow-serving-gpu: Serve a pre-trained model (Mask-RCNN, Faster-RCNN, SSD) on Tensorflow:Serving.

Deploying Keras models using TensorFlow Serving and Flask | by Himanshu Rawlani | Towards Data Science

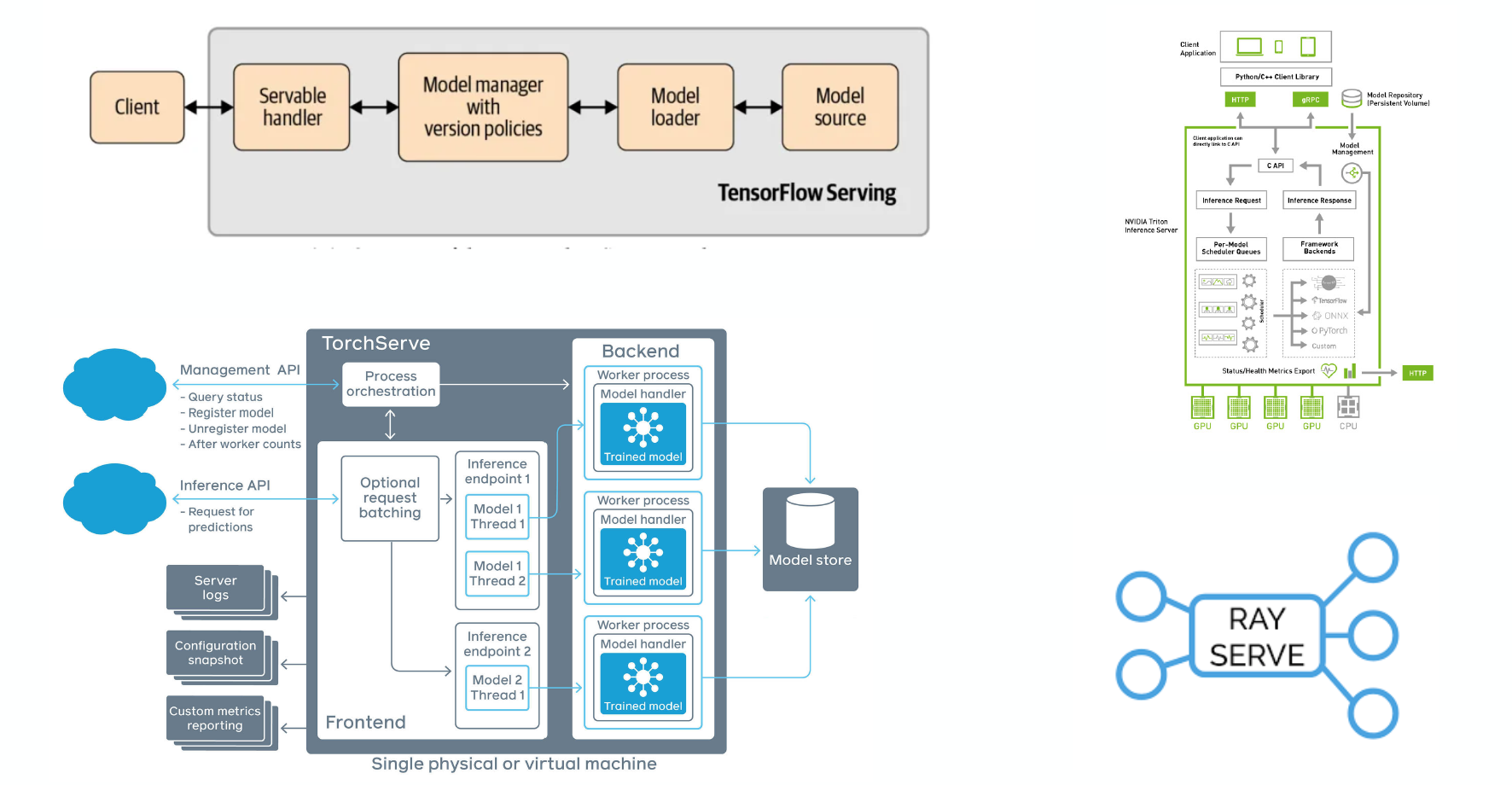

![[PDF] TensorFlow-Serving: Flexible, High-Performance ML Serving | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/cff81b22995937e1bf9533c800b24209932e402a/3-Figure1-1.png)

![[PDF] TensorFlow-Serving: Flexible, High-Performance ML Serving | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/cff81b22995937e1bf9533c800b24209932e402a/5-Figure2-1.png)